Quantum Error Correction Protocol Shows Promise For Reliable Quantum Computing

Insider Brief

- An IBM Quantum-led team of researchers reports that it has established a practical threshold for effective error correction.

- The researchers suggest the result puts the protocol on par with the well-established surface code, which has held the highest error threshold for nearly two decades.

- Critical Quote: “Our findings bring demonstrations of a low-overhead fault-tolerant quantum memory within the reach of near-term quantum processors.” — from the paper

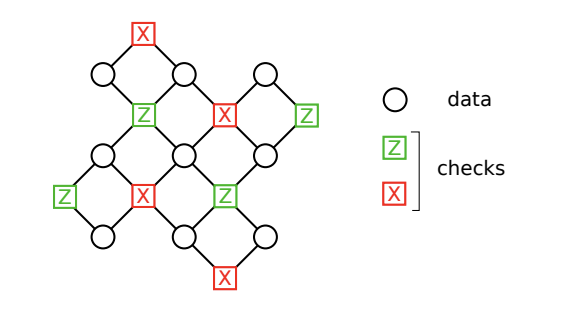

- Image: Tanner graph for the distance-3 surface code

A team of researchers has introduced what could be a significant advance in quantum error correction. In a study posted on the pre-print server arXiv, the team, led by IBM Quantum scientists, reports on a protocol that addresses a critical challenge in quantum computing, the susceptibility of quantum bits (qubits) to errors, by establishing a practical threshold for effective error correction.

The study’s key finding lies in achieving an error threshold of 0.8% for quantum error correction. This threshold is crucial as it defines the maximum error rate that a quantum system can endure while maintaining accurate computations, according to the paper. This accomplishment puts the protocol on par with the well-established surface code, which has maintained the highest error threshold for nearly two decades.
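To make the idea of a threshold concrete, here is a minimal sketch using a classical repetition code with majority voting. This is a toy analogue chosen for illustration, not the LDPC protocol from the paper, and its threshold (50%) is unrelated to the 0.8% figure above. The function name `majority_vote_failure` and the sample error rates are assumptions made for this example. The point is only the qualitative behavior: below the threshold, adding redundancy suppresses errors; above it, adding redundancy makes things worse.

```python
from math import comb


def majority_vote_failure(n: int, p: float) -> float:
    """Probability that a majority of n independent bits flip.

    n is the (odd) number of copies in a classical repetition code and
    p is the chance that any single copy flips. The decoder fails when
    more than half of the copies are wrong.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


# Illustrative error rates: two below the toy code's 50% threshold, one above.
for p in (0.01, 0.4, 0.6):
    rates = [majority_vote_failure(n, p) for n in (3, 5, 7)]
    print(f"p = {p}: " + ", ".join(f"n={n}: {r:.3g}" for n, r in zip((3, 5, 7), rates)))
```

For error rates below the threshold, the printed failure probability shrinks as the code grows; above it, the failure probability grows instead. Quantum codes such as the surface code and the LDPC family discussed here show the same kind of crossover, only at a much lower physical error rate.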

The protocol is designed around a family of Low-Density Parity-Check (LDPC) codes, known for their high encoding rate. It implements fault-tolerant memory through repeated syndrome measurement cycles. These cycles require ancillary qubits and a specific circuit structure consisting of nearest-neighbor controlled-NOT (CNOT) gates. Technically speaking, the connectivity between qubits is structured as a degree-6 graph, composed of two edge-disjoint planar subgraphs. As an example, the researchers report that the protocol can preserve 12 logical qubits over 10 million syndrome cycles using only 288 physical qubits, assuming a physical error rate of 0.1%. In contrast, achieving similar error suppression using the surface code would demand over 4000 physical qubits.
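The overhead comparison can be worked through with simple arithmetic. The sketch below uses only the figures quoted in this article; it assumes the "over 4000" surface-code figure refers to protecting the same 12 logical qubits and treats 4000 as a lower bound. The variable names are illustrative.

```python
# Figures quoted in the article.
logical_qubits = 12
ldpc_physical_qubits = 288

# Assumption: "over 4000" covers the same 12 logical qubits, so 4000 is a
# lower bound on the surface-code cost rather than an exact count.
surface_physical_lower_bound = 4000

# Physical qubits spent per protected logical qubit.
ldpc_per_logical = ldpc_physical_qubits / logical_qubits
surface_per_logical = surface_physical_lower_bound / logical_qubits

# How many times more physical qubits the surface code needs, at minimum.
overhead_ratio = surface_physical_lower_bound / ldpc_physical_qubits

print(f"LDPC protocol: {ldpc_per_logical:.0f} physical qubits per logical qubit")
print(f"Surface code:  more than {surface_per_logical:.0f} physical qubits per logical qubit")
print(f"Surface code needs more than {overhead_ratio:.1f}x as many physical qubits")
```

On these numbers, the LDPC-based protocol spends 24 physical qubits per logical qubit, while the surface code would need more than 300, a gap of over an order of magnitude.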

The practical implications of this work are significant, particularly for near-term quantum processors. The protocol’s efficiency and compatibility with existing quantum hardware offer a potential pathway to bridging the gap between current quantum computing capabilities and the ultimate goal of fault-tolerant quantum memory.

The researchers write: “Our findings bring demonstrations of a low-overhead fault-tolerant quantum memory within the reach of near-term quantum processors.”

In addition to presenting the protocol, the researchers offer a fresh perspective on fault-tolerant quantum memory. This approach complements existing methods, such as concatenation-based schemes, by focusing on minimizing qubit overhead. While these schemes combine LDPC codes with the surface code for improved error correction, they introduce a substantial qubit overhead that the newly proposed LDPC-based protocol aims to circumvent.

However, the study acknowledges certain challenges in implementing the protocol with existing quantum hardware. These include the development of a low-loss second layer, qubits capable of being coupled to seven connections, and the creation of long-range couplers. The researchers are cautiously optimistic about these challenges, proposing potential solutions like adjusting packaging techniques and enhancing qubit connectivity.

The team includes Sergey Bravyi, Andrew W. Cross, Jay M. Gambetta, Dmitri Maslov, Patrick Rall and Theodore J. Yoder, all of IBM Quantum.