Quantum Computers Show Advantage in Key Particle Physics Calculations

Insider Brief

- Quantinuum and University of Freiburg scientists offer evidence that quantum computers can perform key particle collision calculations faster than traditional supercomputers.

- The study demonstrates a quantum algorithm that breaks complex integrals into sine and cosine terms for more efficient calculations.

- While current quantum hardware has limitations, researchers see long-term potential for reducing computational bottlenecks in particle physics.

Quantum computers can crunch key particle collision calculations faster than traditional supercomputers, researchers report.

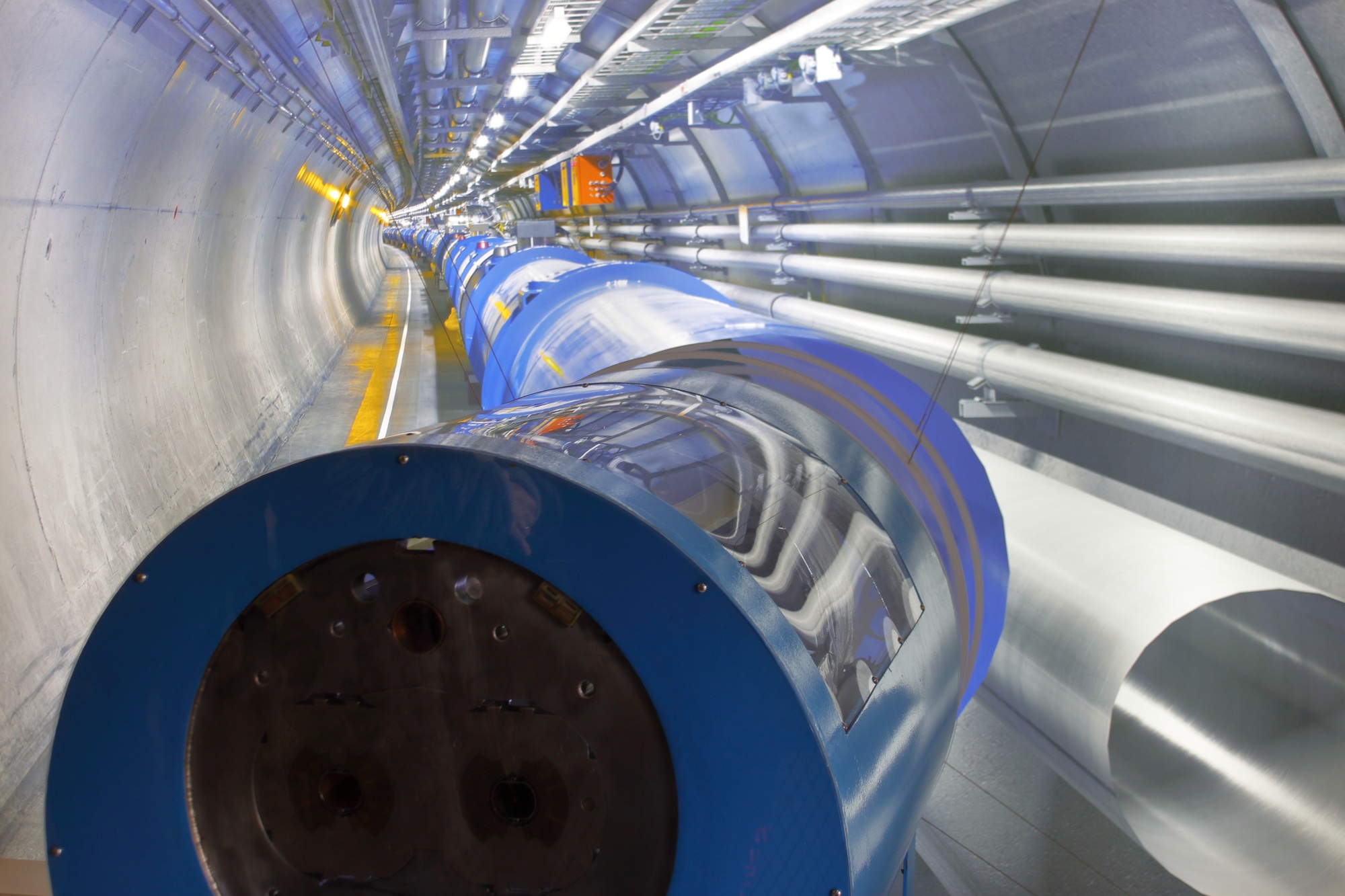

A collaborative study by Quantinuum and the University of Freiburg finds that quantum integration methods outpace classical approaches when computing the “cross sections” that describe how particles scatter in high-energy physics experiments such as those at the Large Hadron Collider (LHC) at CERN. The work, published on the pre-print server arXiv, offers a glimpse into how quantum computing may one day ease the enormous computational demands of particle physics research.

According to the paper, at the heart of high-energy physics lies the concept of a cross section — a measure that indicates the likelihood of certain particles interacting or decaying during collisions. In experiments at facilities like the LHC, billions of collisions generate mountains of data. Theoretical predictions, which are crucial for interpreting this data, depend on calculating cross sections through methods that require extensive numerical integration. These calculations can consume billions of CPU hours each year.

“Generally, scientists run Monte Carlo simulations to make their theoretical predictions. Monte Carlo simulations are currently the biggest computational bottleneck in experimental high-energy physics (HEP), costing enormous CPU resources, which will only grow larger as new experiments come online,” the Quantinuum team writes in a company blog post. “It’s hard to put a specific number on exactly how costly calculations like this are, but we can say that probing fundamental physics at the LHC probably uses roughly 10 billion CPUH/year for data treatment, simulations, and theory predictions. Knowing that the theory predictions represent approximately 15-25% of this total, putting even a 10% dent in this number would be a massive change.”

In their study, researchers Ifan Williams of Quantinuum and Mathieu Pellen of the University of Freiburg developed a quantum algorithm that uses a technique called Quantum Monte Carlo Integration (QMCI) to perform these integrals more efficiently. QMCI is the quantum counterpart to classical Monte Carlo methods. Classical methods approximate an answer by randomly sampling points, so their error shrinks in proportion to one over the square root of the number of samples. The quantum method offers a quadratic speed-up: its error shrinks in proportion to one over the number of samples. In simple terms, this means that for the same level of accuracy, a quantum computer could use far fewer samples than a classical computer. Quantinuum scientists have previously introduced a full QMCI engine.
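The classical scaling described above can be checked with a small experiment. The following is a minimal sketch, not code from the study: the integrand `toy_integrand` and the helper names are hypothetical stand-ins, and the integral is a simple one-dimensional one rather than a cross section.

```python
import math
import random


def mc_integrate(f, n_samples, rng):
    """Estimate the integral of f over [0, 1] by averaging f at uniform random points."""
    total = 0.0
    for _ in range(n_samples):
        total += f(rng.random())
    return total / n_samples


def rms_error(f, exact, n_samples, n_repeats=200, seed=0):
    """Root-mean-square error of the estimate over many independent runs."""
    rng = random.Random(seed)
    squared = 0.0
    for _ in range(n_repeats):
        squared += (mc_integrate(f, n_samples, rng) - exact) ** 2
    return math.sqrt(squared / n_repeats)


def toy_integrand(x):
    """Hypothetical stand-in integrand; its exact integral over [0, 1] is 1/3."""
    return x * x


if __name__ == "__main__":
    # Each step uses 4x more samples; the error should drop by roughly 2x.
    for n in (100, 400, 1600):
        print(n, rms_error(toy_integrand, 1.0 / 3.0, n))
```

Going from 100 to 1,600 samples costs 16 times more work but should reduce the error only about fourfold, which is the square-root behavior that the quantum approach aims to improve on.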

Fourier Quantum Monte Carlo Integration

The researchers’ approach builds on a specific variant of the QMCI algorithm that leverages a Fourier-based method. Known as Fourier Quantum Monte Carlo Integration, this method transforms the problem into one where complicated functions are expressed as a sum of sine and cosine terms.

One way to think of sine and cosine terms is to see them as the basic building blocks of waves. In quantum computing, breaking a complex problem into these wave-like components allows the computer to process and combine them efficiently, making difficult calculations — like predicting particle collisions — more manageable.

Specifically, in this study, the quantum computer can estimate the value of each term more directly and combine them to yield the final result. This step-by-step procedure not only simplifies the calculation but also retains the promised speed advantage.
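The decompose, estimate and recombine idea can be illustrated classically. This is a rough sketch under stated assumptions rather than the researchers' implementation: the integrand, the 16-point distribution and all function names are hypothetical, and the function is extended evenly so that only cosine terms appear (a general function would need sine terms as well). The per-term averages are computed exactly here; on a quantum computer they are the quantities that would be estimated.

```python
import math


def cosine_coefficients(f, n_terms, n_grid=2000):
    """Coefficients c_k such that f(x) ~ sum_k c_k * cos(k*pi*x) on [0, 1]."""
    coeffs = []
    for k in range(n_terms):
        total = 0.0
        for j in range(n_grid):
            x = (j + 0.5) / n_grid  # midpoint rule for the coefficient integral
            total += f(x) * math.cos(k * math.pi * x)
        scale = 1.0 if k == 0 else 2.0
        coeffs.append(scale * total / n_grid)
    return coeffs


def expectation(g, points, probs):
    """Average of g over a discrete probability distribution."""
    return sum(p * g(x) for x, p in zip(points, probs))


def fourier_expectation(f, points, probs, n_terms=20):
    """Rebuild E[f] from the expectations of the individual cosine terms."""
    result = 0.0
    for k, c_k in enumerate(cosine_coefficients(f, n_terms)):
        # Classical stand-in for the per-term estimate made on the device.
        trig_mean = expectation(lambda x: math.cos(k * math.pi * x), points, probs)
        result += c_k * trig_mean
    return result


def toy_integrand(x):
    """Hypothetical integrand used only for illustration."""
    return x * x


def toy_distribution(n_points=16):
    """Hypothetical bell-shaped distribution on a 16-point grid in [0, 1]."""
    points = [(j + 0.5) / n_points for j in range(n_points)]
    weights = [math.exp(-((x - 0.3) ** 2) / 0.08) for x in points]
    norm = sum(weights)
    return points, [w / norm for w in weights]


if __name__ == "__main__":
    points, probs = toy_distribution()
    print("direct :", expectation(toy_integrand, points, probs))
    print("fourier:", fourier_expectation(toy_integrand, points, probs))
```

The two printed values should agree closely, with the small gap coming from truncating the series at 20 terms.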

For example, one of the key calculations in high-energy physics involves simulating the decay of particles — a process central to understanding how fundamental forces interact. In such decay processes, an unstable particle transforms into other particles, and the probability of this happening is encoded in the cross section, which quantifies how likely the interaction is to occur. The study specifically looked at decay processes where one particle decays into three others. The team decomposed the complex integral for the cross section into simpler building blocks, such as monomials (simple power functions) and Breit-Wigner distributions. The latter are used to model the resonance behavior of unstable particles like the W and Z bosons, which mediate the weak nuclear force.
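To make the Breit-Wigner building block concrete, here is a minimal sketch of one common form of the distribution, the unnormalized relativistic line shape. The exact parameterization used in the study may differ, and the Z boson mass and width below are approximate values chosen only as illustrative inputs.

```python
def breit_wigner(s, mass, width):
    """Unnormalized relativistic Breit-Wigner line shape.

    s is the invariant mass squared; mass and width share the same energy units.
    """
    return 1.0 / ((s - mass ** 2) ** 2 + (mass * width) ** 2)


# Approximate Z boson mass and width in GeV (illustrative inputs only).
Z_MASS = 91.19
Z_WIDTH = 2.50

if __name__ == "__main__":
    peak = breit_wigner(Z_MASS ** 2, Z_MASS, Z_WIDTH)
    for offset in (-2.0, -1.0, 0.0, 1.0, 2.0):
        # Step away from the peak in units of mass * width.
        s = Z_MASS ** 2 + offset * Z_MASS * Z_WIDTH
        ratio = breit_wigner(s, Z_MASS, Z_WIDTH) / peak
        print(f"offset {offset:+.0f}: relative height {ratio:.3f}")
```

The curve peaks where the invariant mass squared equals the particle's mass squared and falls to half its height one mass-times-width step away. That sharp peak is what makes such factors awkward to integrate by uniform sampling.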

Fewer Samples, Same Precision

In classical simulations, the calculation of these integrals requires sampling millions or billions of points, and even minor improvements in efficiency can lead to significant savings in computational time. By contrast, the quantum algorithm uses fewer samples to achieve the same level of precision. The improvement arises because the quantum method estimates the expectation value (the average outcome of a measurement) with an error that shrinks quadratically faster as the number of samples increases. In practice, this means that where a classical algorithm might require about a million samples to reach a given precision, a quantum algorithm would need only on the order of a thousand.
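The sample counts follow from the two error laws. The sketch below is a back-of-the-envelope calculation that ignores constant factors and hardware overheads; the function names and the `spread` parameter (a stand-in for the integrand's standard deviation) are assumptions made for illustration.

```python
import math


def classical_samples(target_error, spread=1.0):
    """Samples needed if the error behaves like spread / sqrt(N)."""
    return math.ceil((spread / target_error) ** 2)


def quantum_queries(target_error, spread=1.0):
    """Queries needed if the error behaves like spread / N."""
    return math.ceil(spread / target_error)


if __name__ == "__main__":
    for target in (1e-2, 1e-3, 1e-4):
        print(target, classical_samples(target), quantum_queries(target))
```

For a target error of one part in a thousand, this gives roughly a million classical samples against roughly a thousand quantum queries, and the gap widens as the target tightens.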

The study explains how this happens in accessible terms. First, the quantum computer is programmed to prepare a quantum state that represents a probability distribution. This state is a superposition — a core idea in quantum mechanics — of many possible values at once. Next, the quantum circuit applies a simple function to this state, encoding the value of the integrand (the function to be integrated) into the amplitudes, or weights, of the quantum state. Finally, the quantum computer uses a method known as Quantum Amplitude Estimation (QAE) to extract the expected value, which corresponds to the result of the integration. This procedure avoids some of the heavy arithmetic that would normally bog down the calculation, allowing the quantum computer to remain fast and efficient.
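The three steps can be mimicked with ordinary arithmetic on a list of amplitudes. This is a toy classical simulation under stated assumptions, not the circuit from the study: the distribution, the integrand and all function names are hypothetical, the integrand is assumed to be rescaled into the range 0 to 1, and the final probability is read off exactly instead of being estimated with Quantum Amplitude Estimation.

```python
import math


def prepare_state(probs):
    """Step 1: amplitudes sqrt(p_i), so measuring index i has probability p_i."""
    return [math.sqrt(p) for p in probs]


def encode_integrand(amplitudes, f_values):
    """Step 2: rotate an extra qubit so its |1> amplitude carries sqrt(f(x_i)).

    Returns, for each index i, the amplitudes on (|i>|0>, |i>|1>).
    """
    state = []
    for amp, f in zip(amplitudes, f_values):
        if not 0.0 <= f <= 1.0:
            raise ValueError("integrand values must be rescaled into [0, 1]")
        state.append((amp * math.sqrt(1.0 - f), amp * math.sqrt(f)))
    return state


def probability_of_one(state):
    """Step 3 target: the probability that the extra qubit reads 1."""
    return sum(amp_one ** 2 for _, amp_one in state)


def toy_example(n_qubits=3):
    """Hypothetical distribution (p proportional to x) and integrand (x squared)."""
    n = 2 ** n_qubits
    points = [(j + 0.5) / n for j in range(n)]
    norm = sum(points)
    probs = [x / norm for x in points]
    f_values = [x * x for x in points]
    return probs, f_values


if __name__ == "__main__":
    probs, f_values = toy_example()
    state = encode_integrand(prepare_state(probs), f_values)
    print("probability of reading 1:", probability_of_one(state))
    print("weighted average of f   :", sum(p * f for p, f in zip(probs, f_values)))
```

The two printed numbers coincide, which is the point of the encoding: the integral becomes a single probability. Amplitude estimation is the step that would extract that probability with fewer repetitions than simply measuring the extra qubit many times.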

Limitations And Future Directions

However, the researchers are careful to note that their findings come with caveats. The current generation of quantum hardware, the so-called noisy intermediate-scale quantum (NISQ) devices, does not yet have the capacity to run these algorithms at the scale that full particle physics simulations would need. The resource estimates provided in the study, for example, indicate that even relatively simple calculations may require millions of quantum gate operations, as well as qubit resources, that are currently beyond the reach of commercial devices. These challenges, while significant, are not insurmountable. As the field of quantum computing moves toward fault-tolerant machines (devices that can correct their own errors), the researchers expect that the resource requirements will become more manageable.

The study also points out several areas for further research. One of the key limitations is the need to prepare quantum states that accurately represent continuous probability distributions using a finite number of qubits. This state-preparation process introduces systematic errors that must be carefully managed. In their work, the researchers examined different methods for state preparation, including variational techniques (where parameters in the quantum circuit are optimized using classical computers) and Fourier expansion methods. Each has its benefits and drawbacks: while variational methods are efficient for small systems, they struggle with larger numbers of qubits due to issues known as “barren plateaus,” where the optimization landscape becomes too flat. Fourier methods, on the other hand, scale more favorably but require extra qubits for ancillary calculations and may have a lower probability of success without further improvements.
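The systematic error from representing a continuous distribution on a finite grid can be seen in a toy case. The sketch below is an illustration under stated assumptions, not one of the state-preparation methods from the study: the density 2x, the integrand x squared and the function names are hypothetical, chosen because the exact answer (1/2) is known.

```python
def discretize(pdf, n_qubits):
    """Place a continuous density on the 2**n_qubits grid points an n-qubit register can index."""
    n = 2 ** n_qubits
    points = [(j + 0.5) / n for j in range(n)]
    weights = [pdf(x) for x in points]
    norm = sum(weights)
    return points, [w / norm for w in weights]


def discretization_error(n_qubits):
    """Error in E[x^2] under the density 2x on [0, 1], whose exact value is 1/2."""
    points, probs = discretize(lambda x: 2.0 * x, n_qubits)
    estimate = sum(p * x * x for x, p in zip(points, probs))
    return abs(estimate - 0.5)


if __name__ == "__main__":
    for n_qubits in range(1, 9):
        print(n_qubits, discretization_error(n_qubits))
```

In this toy case each extra qubit cuts the discretization error by about a factor of four. The error never vanishes at a finite register size, which is why it has to be budgeted alongside the statistical error.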

Looking ahead, the team envisions that quantum computing will not only accelerate calculations for particle physics but could also transform other fields that rely on complex integrals. From financial modeling to weather prediction, many scientific domains stand to benefit from the enhanced efficiency of quantum integration. In high-energy physics, specifically, more accurate and faster calculations of cross sections could help researchers test theories and design experiments more effectively.

High-Energy, Big Impact

For now, the study serves as a promising proof of concept. If the findings hold up, however, the long-term applications could be considerable.

While high-energy physics may seem far removed from daily life, its advances often drive real-world innovations. Research in quantum-enhanced particle calculations, like those explored in the Quantinuum and University of Freiburg study, could lead not just to a better understanding of the universe but also to broader technological shifts.

For example, over the decades, discoveries in particle physics have powered innovations in computing, medicine, and energy. The development of semiconductors, which underpin modern electronics, owes much to quantum mechanics — the same principles guiding today’s quantum computing research. Medical imaging and cancer treatments, such as PET scans and proton therapy, rely on physics first explored in high-energy experiments. More accurate particle simulations could refine these techniques, improving diagnostics and treatment outcomes.

The impact extends beyond healthcare. High-energy physics drives advances in high-performance computing and artificial intelligence — technologies that increasingly shape industries from finance to climate modeling. Understanding fundamental particle interactions also plays a role in nuclear fusion research, a potential clean energy source that could transform global power generation.

Security applications, including radiation detection and national defense technologies, also draw from particle physics. And while the payoff is more abstract, a better grasp of dark matter, antimatter, and the forces governing the universe may one day lead to breakthroughs as profound as quantum mechanics itself.

The paper is quite technical and detailed. For a deeper dive, please see the study published on arXiv. Researchers use pre-print servers to quickly disseminate their findings for review; however, these studies have not been officially peer reviewed.