Quantinuum Unveils First Contribution Toward Responsible AI, Uniting the Power of Its Quantum Processors With Experimental Work on Integrating Classical and Quantum Computing

Insider Brief

- A team of researchers at Quantinuum has implemented the first scalable quantum natural language processing (QNLP) model, QDisCoCirc, marking significant progress in the safe integration of quantum computing with AI for text-based tasks such as question answering.

- The QDisCoCirc model introduces enhanced interpretability, allowing researchers to inspect how AI models make decisions, addressing a major challenge in current AI systems, particularly in critical sectors like healthcare and finance.

- The study sidesteps key challenges in quantum machine learning, such as the “barren plateau” problem, by relying on compositional generalization, which helps make large-scale quantum models more efficient and scalable for complex tasks.

A team of Quantinuum researchers has made further progress toward practical quantum artificial intelligence (AI), reporting the first implementation of scalable quantum natural language processing (QNLP). Their model, called QDisCoCirc, integrates quantum computing with AI to tackle text-based tasks such as question answering, according to a research paper posted on the preprint server arXiv.

Ilyas Khan, Founder and Chief Product Officer, Quantinuum, said that while the team has not yet solved a problem at scale, the research marks an important step toward showing how interpretability and transparency could lead to generative AI that is safer and more effective.

“Bob has been working on qNLP for over a decade now, and for the past 6 years I have had the privilege of having a front row seat as we prepare for quantum computers that really start to address real world concerns in a tangible way,” said Khan. “Our paper on compositional intelligence that was made public at the start of summer laid down a framework for what it means to be interpretable, and we now have the first experimental implementation of a fully operational system. This is hugely hugely exciting and alongside our other work in areas such as Chemistry, Pharma, Biology, Optimization and Cybersecurity, will start to accelerate towards further scientific discovery in the short term for the quantum sector as a whole.”

He added: “This is not yet Quantum’s ‘ChatGPT’ moment, but the path to real world relevance is now established and accelerating towards that reality will, in my view, involve the concept of quantum supercomputers.”

Quantum AI, and especially the combination of quantum computing and natural language processing (NLP), has been largely theoretical, with limited experimental evidence. Khan and Bob Coecke, Quantinuum’s Chief Scientist and Head of Quantum-Compositional Intelligence, wrote an article about the project to accompany three technical papers that were uploaded to arXiv and presented at a conference this week. According to them, this evidence-based work aims to shine a very bright light on the intersection between quantum computing and NLP.

The work also demonstrates how quantum systems can be applied to AI tasks in ways that are more interpretable and scalable than traditional methods.

They write: “At Quantinuum, we have been working on natural language processing (NLP) using quantum computers for some time now. We are excited to have recently carried out experiments which demonstrate not only how it is possible to train a model for a quantum computer, but also how to do this in a way that is interpretable for users. Moreover, and what makes this work even more exciting, we have promising theoretical indications of the usefulness of quantum computers for interpretable NLP.”

The experiment at the heart of the reported work relies on compositional generalization. Compositionality, an idea derived from category theory and applied here to NLP, treats language structures as mathematical objects that can be combined and composed.
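To make “combined and composed” concrete, here is a minimal sketch in plain Python, loosely in the spirit of the category-theoretic models this line of work builds on but not taken from the papers. It assumes a noun is a small vector and a modifier is a linear map (a matrix) acting on it; the words, the two-dimensional meaning space and the numbers are all hypothetical. The point it shows is that a phrase’s meaning can be computed either word by word or from a single composed map, with the same result.

```python
# Toy illustration of compositional meaning (hypothetical words and values):
# nouns are vectors, modifiers are linear maps (matrices), and the meaning of
# a phrase is obtained by composing the maps of its parts.

Vector = list[float]
Matrix = list[list[float]]


def apply(m: Matrix, v: Vector) -> Vector:
    """Apply a linear map (matrix) to a vector."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]


def compose(a: Matrix, b: Matrix) -> Matrix:
    """Return the matrix of 'a after b', i.e. the product a @ b."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


# Hypothetical two-dimensional meaning space.
dog: Vector = [1.0, 0.0]
big: Matrix = [[2.0, 0.0], [0.0, 1.0]]    # stretches the first feature
angry: Matrix = [[1.0, 0.0], [1.0, 1.0]]  # shears meaning into the second feature

# "angry big dog": apply "big" first, then "angry".
step_by_step = apply(angry, apply(big, dog))
# The same meaning from one composed map for "angry big".
composed = apply(compose(angry, big), dog)

print(step_by_step, composed)  # [2.0, 2.0] [2.0, 2.0]
```

Because composition of linear maps is associative, larger structures can be assembled from smaller pieces without changing what each piece means, which is the property compositional models exploit.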

According to Quantinuum, “A problem currently inherent to quantum machine learning is that of being able to train at scale. We avoid this by making use of ‘compositional generalization’. This means we train small, on classical computers, and then at test time evaluate much larger examples on a quantum computer. There now exist quantum computers which are impossible to simulate classically and so the scope and ambition of our work can increase very rapidly in the coming period.”
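The “train small, evaluate large” recipe rests on parameter reuse. The following toy sketch illustrates that idea under our own assumptions; it is not Quantinuum’s code, and the words, parameter values and the `Gate` record are hypothetical. Each word owns a fixed parameter, assumed to have been learned once on short stories, and a story of any length is turned into a text circuit (a list of gates, one qubit wire per character) by looking those parameters up.

```python
# Toy sketch of compositional generalization via parameter reuse.
# Hypothetical: each word owns one trained parameter, and a story is turned
# into a "text circuit" (a list of gates) by looking words up in that table.
from dataclasses import dataclass


@dataclass(frozen=True)
class Gate:
    word: str               # word whose parameter the gate uses
    wires: tuple[int, ...]  # qubits (one per character) the gate acts on
    theta: float            # parameter looked up from the trained table


# Parameters assumed to have been learned classically on short stories.
TRAINED = {"walks": 0.7, "follows": 1.3}


def text_circuit(story: list[tuple[str, tuple[str, ...]]]) -> tuple[int, list[Gate]]:
    """Map (word, characters) sentences to gates; each new character gets a new wire."""
    wire_of: dict[str, int] = {}
    gates = []
    for word, characters in story:
        # Reuse a character's wire if seen before, otherwise allocate the next one.
        wires = tuple(wire_of.setdefault(c, len(wire_of)) for c in characters)
        gates.append(Gate(word, wires, TRAINED[word]))  # KeyError for unseen words
    return len(wire_of), gates


short_story = [("walks", ("Alice",)), ("follows", ("Bob", "Alice"))]
long_story = short_story + [
    ("follows", ("Carol", "Bob")),
    ("walks", ("Dave",)),
    ("follows", ("Eve", "Dave")),
]

for story in (short_story, long_story):
    n_wires, gates = text_circuit(story)
    print(n_wires, len(gates), len(TRAINED))  # wires, gates, trained parameters
```

The longer story needs more qubits and more gates but no new parameters. In the actual work, circuits too large to simulate classically would be run on quantum hardware rather than assembled and evaluated in Python.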

By training small and evaluating large, the researchers mitigated one of the major challenges in quantum machine learning: the so-called “barren plateau” problem, in which gradients become vanishingly small as models grow, making the training of large-scale quantum models inefficient. According to the researchers, the study provides tangible and verifiable evidence that quantum systems could not only solve certain types of problems more efficiently but also make AI models transparent about how they reach decisions, a growing and vital concern in the field of AI.

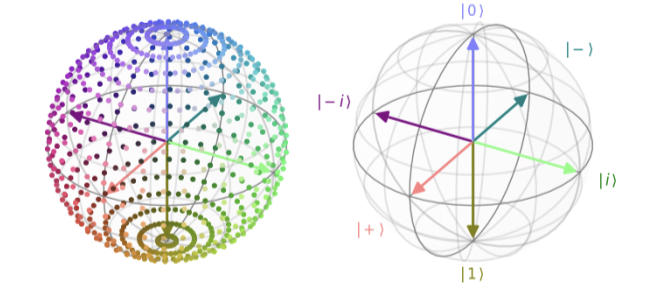

The results were achieved on Quantinuum’s H1-1 trapped-ion quantum processor, which provided the computational power to execute the quantum circuits that form the backbone of the QDisCoCirc model. The QDisCoCirc model leverages the principles of compositionality, a concept borrowed from linguistics and category theory, to break down complex text inputs into smaller, understandable components.

In the paper, the research team explains the role the H1-1 played in the experiments: “We present experimental results for the task of question answering with QDisCoCirc. This constitutes the first proof-of-concept implementation of scalable compositional QNLP. To demonstrate compositional generalization on a real device beyond instance sizes that we have simulated classically for one of our datasets, we use Quantinuum’s H1-1 trapped-ion quantum processor, which features state-of-the-art two-qubit-gate fidelity.”

Practical Implications

This research has significant implications for the future of AI and quantum computing. One of the most notable outcomes is the potential to use quantum AI for interpretable models. In current large language models (LLMs) like GPT-4, decisions are often made in a “black box” fashion, making it difficult for researchers to understand how or why certain outputs are generated. In contrast, the QDisCoCirc model allows researchers to inspect the internal quantum states and the relationships between different words or sentences, providing insights into the decision-making process.

In practical terms, this could have wide-reaching applications in areas such as question answering, framed in this work as a classification task, where understanding how a machine reaches a conclusion is as important as the answer itself. By offering an interpretable approach, quantum AI based on compositional methods could be applied in the legal, medical, and financial sectors, where accountability and transparency in AI systems are critical.

The study also showed that compositional generalization, the model’s ability to generalize from small training examples to larger and more complex inputs, was successful. This could be a key advantage over classical models such as transformers and LSTMs, which, according to the authors and the evidence presented in the technical papers, failed to generalize in the same way when tested on longer or more intricate texts in this work.

Performance Beyond Classical?

Beyond the immediate NLP tasks, the researchers also explored whether quantum circuits could outperform classical baselines in certain cases, including comparisons with models like GPT-4. The study found that the classical models failed to generalize to larger text instances, performing no better than random guessing, while the quantum circuits demonstrated successful compositional generalization.

This suggests that quantum systems, as they scale, could be uniquely suited to more complex forms of language processing, especially on larger datasets, although large language models are also likely to improve their performance.

The researchers also point to the quantum model’s resource efficiency. Because the cost of simulating quantum systems on classical computers grows rapidly with their size, the study argues that quantum computers will be needed to handle large-scale NLP tasks of this kind in the future.

“As text circuits grow in size, classical simulations become impractical, highlighting the need for quantum systems to tackle these tasks,” the authors wrote.
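Simple arithmetic shows why simulation becomes impractical. A brute-force statevector simulator stores 2^n complex amplitudes for n qubits; assuming 16 bytes per amplitude (two 64-bit floats), the memory required doubles with every added qubit. This is a back-of-the-envelope sketch, not the paper’s own cost analysis, and specialized techniques such as tensor-network simulation can do much better for some circuits.

```python
# Memory needed to hold a full statevector of n qubits, assuming
# 16 bytes per complex amplitude (two 64-bit floats).
BYTES_PER_AMPLITUDE = 16


def statevector_bytes(n_qubits: int) -> int:
    """Return the bytes needed to store all 2**n_qubits amplitudes."""
    return BYTES_PER_AMPLITUDE * 2**n_qubits


def human(n_bytes: int) -> str:
    """Format a byte count using binary prefixes (1 KiB = 1024 bytes, etc.)."""
    value = float(n_bytes)
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if value < 1024:
            return f"{value:.0f} {unit}"
        value /= 1024
    return f"{value:.0f} PiB"


for n in (10, 20, 30, 40, 50):
    print(n, human(statevector_bytes(n)))
# 10 16 KiB
# 20 16 MiB
# 30 16 GiB
# 40 16 TiB
# 50 16 PiB
```

Every ten extra qubits multiply the memory by roughly a thousand, which is why larger text circuits quickly outgrow classical hardware.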

Methods and Experimental Setup

The researchers developed datasets featuring simple, binary question-answering tasks as a proof of concept for their QDisCoCirc model. These datasets were designed to explore how well the quantum circuits could handle basic linguistic tasks, such as determining relationships between characters in a story. The team employed parameterized quantum circuits to create word embeddings, which are representations of words in a mathematical space. These embeddings were then used to build larger text circuits, which the quantum processor evaluated.
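A minimal sketch of how such a text circuit might be evaluated for a yes/no question follows. The simplifications are ours, not the paper’s: each story character is one qubit, a one-character sentence is a single-qubit RY rotation, a two-character sentence is a controlled-RY gate, each word has a single hypothetical parameter (set by hand, not trained), and a question is answered by measuring one character’s qubit. The real QDisCoCirc word circuits and question encoding are richer; this only illustrates building a larger circuit from word-level pieces and reading off a binary answer. A plain-Python statevector simulation keeps the example dependency-free.

```python
import math

# Hypothetical single-parameter "word circuits" (values chosen by hand, not trained).
PARAMS = {"moves": math.pi, "follows": math.pi}


def _rotate(state, target_bit, theta, control_bit=0):
    """Apply RY(theta) to the qubit at `target_bit`; if control_bit != 0, only where it is 1."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    new = state[:]
    for i in range(len(state)):
        # Visit each (target=0, target=1) amplitude pair once, if the control is satisfied.
        if i & target_bit == 0 and (control_bit == 0 or i & control_bit):
            j = i | target_bit
            new[i] = c * state[i] - s * state[j]
            new[j] = s * state[i] + c * state[j]
    return new


def answer(characters, story, question_character):
    """Run the text circuit from |0...0> and return (P(question qubit = 1), yes/no)."""
    n = len(characters)
    # First character is the most significant bit of the basis-state index.
    bit = {name: 1 << (n - 1 - k) for k, name in enumerate(characters)}
    state = [1.0] + [0.0] * (2**n - 1)
    for word, actors in story:
        if len(actors) == 1:  # e.g. "Alice moves"
            state = _rotate(state, bit[actors[0]], PARAMS[word])
        else:  # e.g. "Bob follows Alice": Alice's qubit controls Bob's
            follower, leader = actors
            state = _rotate(state, bit[follower], PARAMS[word], control_bit=bit[leader])
    target = bit[question_character]
    p_yes = sum(a * a for i, a in enumerate(state) if i & target)
    return p_yes, p_yes > 0.5


story = [("moves", ("Alice",)), ("follows", ("Bob", "Alice"))]
print(answer(["Alice", "Bob", "Carol"], story, "Bob"))    # (1.0, True)
print(answer(["Alice", "Bob", "Carol"], story, "Carol"))  # (0.0, False)
```

The same word parameters serve any story built from the same vocabulary; only the number of qubits and gates changes with the story’s length.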

Coecke and Khan report that the team’s approach allowed the model to remain interpretable while harnessing the power of quantum mechanics, and they expect its impact to grow as quantum computers become more powerful.

They write: “We embrace ‘compositional interpretability’ as proposed in the article as a solution to the problems that plague current AI. In brief, compositional interpretability boils down to being able to assign a human friendly meaning, such as natural language, to the components of a model, and then being able to understand how they fit together.”

Limitations and Future Directions

While the study marks a significant step forward, it also has limitations, according to the team. One challenge is the current scale of quantum processors: although the QDisCoCirc model shows great promise, the researchers report that larger and more complex applications will require quantum computers with more qubits and higher fidelity. The researchers acknowledge that their results are still at the proof-of-concept stage, but they also point to the rapid progress of quantum hardware, which has now entered what Microsoft calls the age of “reliable” quantum computers and what IBM calls “utility-scale” quantum computing.

“Scaling this up to more complex, real-world tasks remains a significant challenge due to current hardware limitations,” they write, while acknowledging that the landscape is moving very quickly.

Additionally, the study focused primarily on binary question answering, a simplified form of NLP. More intricate tasks, such as parsing entire paragraphs or handling multiple layers of context, will be explored in future research, and the team is already looking into ways to extend the model to more complex text inputs and different types of linguistic structures.

The research team from Quantinuum includes Saskia Bruhn, Gabriel Matos, Tuomas Laakkonen, Anna Pearson, Konstantinos Meichanetzidis and Bob Coecke.

For more technical detail than this summary provides, see the technical papers:

Scalable and interpretable quantum natural language processing: an implementation on trapped ions

Papers on DisCoCirc as a circuit-based model for natural language that turns arbitrary text into text circuits: