ParityQC, University of Innsbruck Physicists Develop Novel Quantum Error Mitigation Method

Insider Brief

- ParityQC and the University of Innsbruck physicists have developed a new strategy to mitigate errors in quantum computers, based on the ParityQC Architecture.

- The team published their paper in Physical Review A.

- Quantum error mitigation refers to a set of techniques and strategies that can reduce or correct errors that naturally occur during quantum computations.

PRESS RELEASE — A group of physicists within ParityQC and the University of Innsbruck have developed a novel strategy to mitigate errors in quantum computers, based on the ParityQC Architecture. The paper outlining the invention has now been published in the journal Physical Review A, as an Editors’ Suggestion, highlighting research that is considered of particular interest, importance, or clarity. This new error mitigation technique exploits the redundant encoding of the ParityQC Architecture to successfully mitigate errors in quantum optimization algorithms. This promising solution aims to tackle the issue of hardware noise and errors which limit the performance of current quantum devices.

One of the key challenges in quantum computing is dealing with errors that occur during computation. In the current generation of quantum computers it is extremely difficult to control qubits effectively, because decoherence and quantum noise can destroy information and cause significant inaccuracies in the results of a computation. A group of physicists at ParityQC and the University of Innsbruck (Anita Weidinger, Glen Bigan Mbeng and Wolfgang Lechner) have developed an error mitigation method that reduces the impact of errors in quantum computers and yields more accurate results.

The paper outlining this invention, “Error Mitigation for Quantum Approximate Optimization”, has now been published in the prestigious journal Physical Review A as an Editors’ Suggestion. The Editors’ Suggestion mark is reserved for a small number of papers published in Physical Review A that the editors and referees find of particular interest, importance, or clarity.

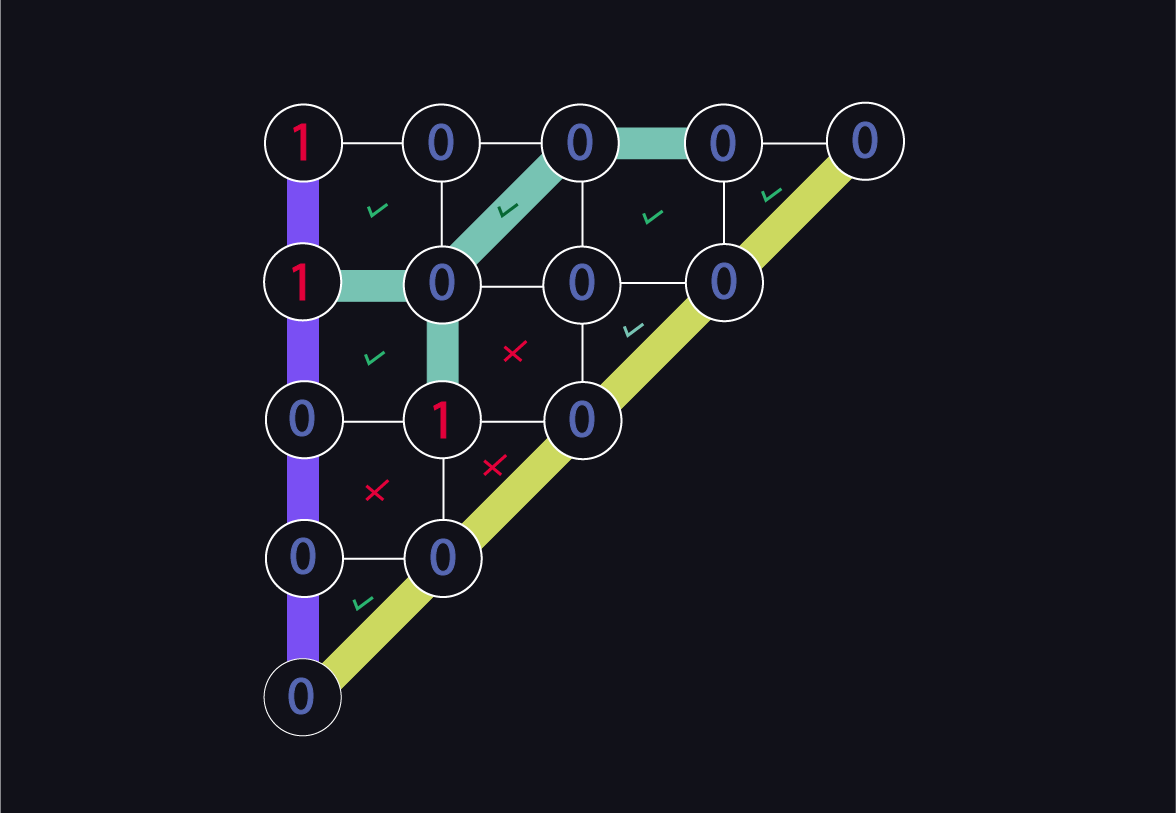

Error mitigation is a crucial topic in the field of quantum computing. Error mitigation techniques allow us to perform more accurate and reliable quantum computations on current quantum devices, which are often noisy and error-prone. These techniques usually work by sampling and post-processing the final output of a noisy quantum computation. The newly published paper takes these techniques a step further: the authors demonstrate that the ParityQC Architecture makes it possible to efficiently mitigate errors in near-term algorithms.

The proposed approach is based on the ParityQC Architecture (also known as the LHZ Architecture), an encoding that was introduced in 2015 and is now a patented technology of ParityQC. The Architecture uses a redundant encoding of logical variables to solve optimization problems on quantum chips. The physicists found that this redundancy can be exploited to mitigate errors in quantum optimization algorithms, specifically the Quantum Approximate Optimization Algorithm (QAOA).
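To give a feel for how a redundant parity encoding can help with errors, here is a purely classical toy sketch in Python. It is not the procedure from the paper. It assumes the all-to-all parity mapping, in which each physical bit stores the product of a pair of logical spins, so the same logical configuration can be read out from several different subsets of physical bits. The function names (`encode`, `decode_star`, `energy`, `mitigate`) and the tiny four-spin problem are hypothetical and chosen only for illustration.

```python
from itertools import combinations

def encode(logical):
    """Map logical spins (+1/-1) to parity bits, one per pair (i, j) with i < j."""
    n = len(logical)
    return {(i, j): logical[i] * logical[j] for i, j in combinations(range(n), 2)}

def decode_star(parity, n, root):
    """Recover logical spins using only the parity bits that involve `root`.

    The root spin is fixed to +1, so the result is defined up to a global
    spin flip (which leaves every pairwise product unchanged).
    """
    spins = [0] * n
    spins[root] = 1
    for k in range(n):
        if k != root:
            spins[k] = parity[(min(root, k), max(root, k))]
    return spins

def energy(spins, J):
    """Ising energy: sum over pairs of J_ij * s_i * s_j."""
    return sum(J[(i, j)] * spins[i] * spins[j] for (i, j) in J)

def mitigate(parity, n, J):
    """Decode once per choice of root and keep the lowest-energy candidate."""
    candidates = [decode_star(parity, n, root) for root in range(n)]
    return min(candidates, key=lambda s: energy(s, J))

# Toy problem whose ground state is `logical` (and its global flip).
logical = [1, -1, -1, 1]
n = len(logical)
J = {(i, j): -logical[i] * logical[j] for i, j in combinations(range(n), 2)}

# Simulate a single readout error on one physical (parity) bit.
noisy = encode(logical)
noisy[(0, 1)] *= -1

best = mitigate(noisy, n, J)
print(best, energy(best, J))
```

In this example the decodings rooted at spins 0 and 1 depend on the corrupted bit and give a poor energy, while the decodings rooted at spins 2 and 3 never read that bit and recover the ground state up to a global flip. Keeping the best of several redundant readouts is the kind of post-processing the redundancy makes possible; the paper itself should be consulted for the actual method and its guarantees.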

The authors demonstrate the effectiveness of the proposed method by applying it to a set of benchmark problems. In the context of QAOA, the paper shows that errors can be significantly mitigated through this new approach, leading to an increased accuracy of the results. As stated by Wolfgang Lechner, co-founder and co-CEO of ParityQC and professor at the University of Innsbruck: “This method can find meaningful applications in the real world to solve a wide range of optimization problems by significantly improving the performance of QAOA. This can close the gap between the imperfect and ‘noisy’ near-term hardware and fully error-corrected codes.”