Parallel Hybrid Network Achieves Better Performance Through Quantum-Classical Collaboration

Insider Brief

- Terra Quantum AG researchers designed and demonstrated a parallel hybrid quantum neural network.

- In a parallel hybrid quantum neural network, the quantum layer and the classical layer process the same input at the same time and then produce a joint output.

- The training results show that the parallel hybrid network can outperform either its quantum layer or its classical layer on its own.

PRESS RELEASE — Building efficient quantum neural networks is a promising direction for research at the intersection of quantum computing and machine learning. A team at Terra Quantum AG designed a parallel hybrid quantum neural network and demonstrated that their model is “a powerful tool for quantum machine learning.” This research was published recently in Intelligent Computing, a Science Partner Journal.

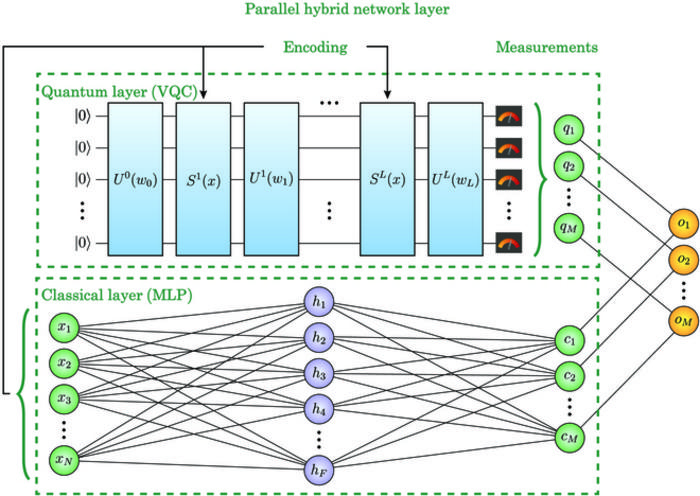

Hybrid quantum neural networks typically consist of both a quantum layer — a variational quantum circuit — and a classical layer — a deep learning neural network called a multi-layered perceptron. This special architecture enables them to learn complicated patterns and relationships from data inputs more easily than traditional machine learning methods.

In this paper, the authors focus on parallel hybrid quantum neural networks. In such networks, the quantum layer and the classical layer process the same input at the same time and then produce a joint output — a linear combination of the outputs from both layers. A parallel network could avoid the information bottleneck that often affects sequential networks, where the quantum layer and the classical layer feed data into each other and process data alternately.
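The parallel layout described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' model: the quantum branch is a one-qubit circuit simulated with NumPy, the classical branch is a one-hidden-layer perceptron, and the names `quantum_layer`, `classical_layer`, `parallel_hybrid`, `s_q` and `s_c` are hypothetical.

```python
import numpy as np

def ry(angle):
    """Matrix of a single-qubit RY rotation gate."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])

def quantum_layer(x, theta):
    """Toy variational circuit (assumption): RY(x) encodes the input,
    RY(theta) is the trainable gate, and the output is the expectation of Z."""
    state = ry(theta) @ ry(x) @ np.array([1.0, 0.0])
    pauli_z = np.array([[1.0, 0.0], [0.0, -1.0]])
    return float(state @ pauli_z @ state)

def classical_layer(x, w1, b1, w2, b2):
    """Toy multi-layered perceptron (assumption): one tanh hidden layer."""
    hidden = np.tanh(w1 * x + b1)
    return float(w2 @ hidden + b2)

def parallel_hybrid(x, theta, mlp_params, s_q, s_c):
    """Both branches see the same input x; the joint output is a
    linear combination of the two branch outputs."""
    return s_q * quantum_layer(x, theta) + s_c * classical_layer(x, *mlp_params)
```

For this toy circuit the quantum branch reduces to cos(x + theta), which hints at why such a branch suits smooth periodic signals. Neither branch consumes the other's output, so neither can act as a bottleneck for the other.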

The training results demonstrate that the authors’ parallel hybrid network can outperform either its quantum layer or its classical layer on its own. Trained on two periodic datasets with high-frequency noise added, the hybrid model shows lower training loss, produces better predictions, and is found to be more adaptable to complex problems and new datasets.

The quantum and classical layers both contribute to this effective quantum-classical interplay. The quantum layer, a variational quantum circuit, maps the smooth periodic parts, while the classical multi-layered perceptron fills in the irregular additions of noise. Both variational quantum circuits and multi-layered perceptrons are considered “universal approximators.” To improve their output during training, variational quantum circuits adjust the parameters of the quantum gates that control the state of the qubits, and multi-layered perceptrons mainly tune the strength of the connections between neurons, the so-called weights.
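The two kinds of trainable quantity, gate parameters and connection weights, can be updated side by side in a single training step. The sketch below is an assumption-laden illustration rather than the authors' training procedure: it uses plain gradient descent on squared error, obtains the gate-angle gradient with the parameter-shift rule, and lets a single linear weight `w` stand in for the perceptron. All function names are hypothetical.

```python
import numpy as np

def circuit_expectation(x, theta):
    """Expectation of Z after RY(x) then RY(theta) on |0>, i.e. cos(x + theta).
    Stands in for running a one-qubit variational circuit (assumption)."""
    return np.cos(x + theta)

def parameter_shift_grad(x, theta):
    """Gradient with respect to the gate angle from two shifted circuit evaluations."""
    shift = np.pi / 2
    return 0.5 * (circuit_expectation(x, theta + shift)
                  - circuit_expectation(x, theta - shift))

def squared_error(x, y, theta, w):
    """Loss of the toy hybrid output: circuit expectation plus w * x."""
    return (circuit_expectation(x, theta) + w * x - y) ** 2

def train_step(x, y, theta, w, lr=0.1):
    """One gradient-descent step on both the gate angle and the classical weight."""
    err = circuit_expectation(x, theta) + w * x - y
    grad_theta = 2 * err * parameter_shift_grad(x, theta)  # quantum gate parameter
    grad_w = 2 * err * x                                    # classical weight
    return theta - lr * grad_theta, w - lr * grad_w
```

The parameter-shift rule is used here because it needs only circuit evaluations, which is what a quantum device can provide. A simulator could differentiate the circuit directly instead.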

At the same time, the success of a parallel hybrid network depends on the setting and tuning of the learning rate and other hyperparameters, such as the number of layers and the number of neurons in each layer of the multi-layered perceptron.

Given that the quantum and classical layers learn at different speeds, the authors discuss how the contribution ratio of each layer affects the performance of the hybrid model and find that adjusting the learning rate is important for keeping the contribution ratio balanced. They therefore point to building a custom learning rate scheduler as a future research direction, because such a scheduler could enhance the speed and performance of the hybrid model.
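One way to picture the balance between the two layers is to measure each branch's share of the output and to give each branch its own learning rate. The sketch below rests on assumptions: the article does not give the paper's definition of the contribution ratio, so the ratio of mean absolute outputs used here is hypothetical, as are the helper names `contribution_ratio` and `sgd_step`.

```python
import numpy as np

def contribution_ratio(q_out, c_out):
    """Share of the combined output magnitude that comes from the quantum branch.
    Hypothetical definition for illustration: ratio of mean absolute outputs."""
    q_mag = np.mean(np.abs(q_out))
    c_mag = np.mean(np.abs(c_out))
    return q_mag / (q_mag + c_mag)

def sgd_step(params, grads, lrs):
    """Gradient-descent update with a separate learning rate per parameter group."""
    return {name: params[name] - lrs[name] * grads[name] for name in params}

# Example: the classical branch gets a smaller learning rate than the quantum one.
params = {"quantum": 1.0, "classical": 1.0}
grads = {"quantum": 1.0, "classical": 1.0}
lrs = {"quantum": 0.1, "classical": 0.01}
updated = sgd_step(params, grads, lrs)
```

A scheduler of the kind the authors propose as future work could, in principle, watch a quantity like this ratio and adjust the per-branch learning rates during training. That logic is not shown here.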