How Can Quantum Computers Make AI Smarter? Quantinuum Researchers Review Quantum Path to Effective, Transparent AI

Insider Brief

- Quantinuum researchers propose using quantum natural language processing (QNLP) to address the inefficiency and opacity of large language models (LLMs), offering solutions that could make AI more interpretable and even more energy-efficient.

- The team’s framework, DisCoCirc, maps text into quantum circuits, enabling models to understand complex relationships in a scalable way, potentially surpassing classical LLMs like ChatGPT in reasoning tasks.

- Quantinuum envisions a future where quantum computers, integrated with AI and high-performance computing in hybrid systems, unlock transformative applications in language processing and beyond.

Quantum computers could address major flaws in artificial intelligence (AI), making it more interpretable and efficient, according to researchers from Quantinuum. In a company blog post, the team describes an approach to natural language processing (NLP) built on quantum circuits, a method that may help address the energy inefficiency and opacity that plague large language models (LLMs) like ChatGPT.

The Problem with LLMs

LLMs have transformed fields ranging from customer service to creative writing, but their success comes with drawbacks. As Bob Coecke and Ilyas Khan from Quantinuum note in their piece, these systems are often referred to as “black boxes.”

The team writes: “The primary problem with LLMs is that nobody knows how they work — as inscrutable ‘black boxes’ they aren’t ‘interpretable’, meaning we can’t reliably or efficiently control or predict their behavior. This is unacceptable in many situations.”

Training and running LLMs also require massive computational resources. The energy consumption of these systems is becoming unsustainable, with some models reportedly demanding as much energy as a small town to train. As governments and organizations worldwide push for energy-efficient and transparent AI, new solutions are needed.

A Quantum Approach

Quantinuum’s research explores how quantum computers could improve AI by making NLP models more interpretable and energy-efficient. Their quantum natural language processing (QNLP) framework, DisCoCirc, transforms text into “text circuits.” These circuits represent how entities in a story interact and evolve over time, using a two-dimensional structure rather than the linear sequence typical of classical text representation.

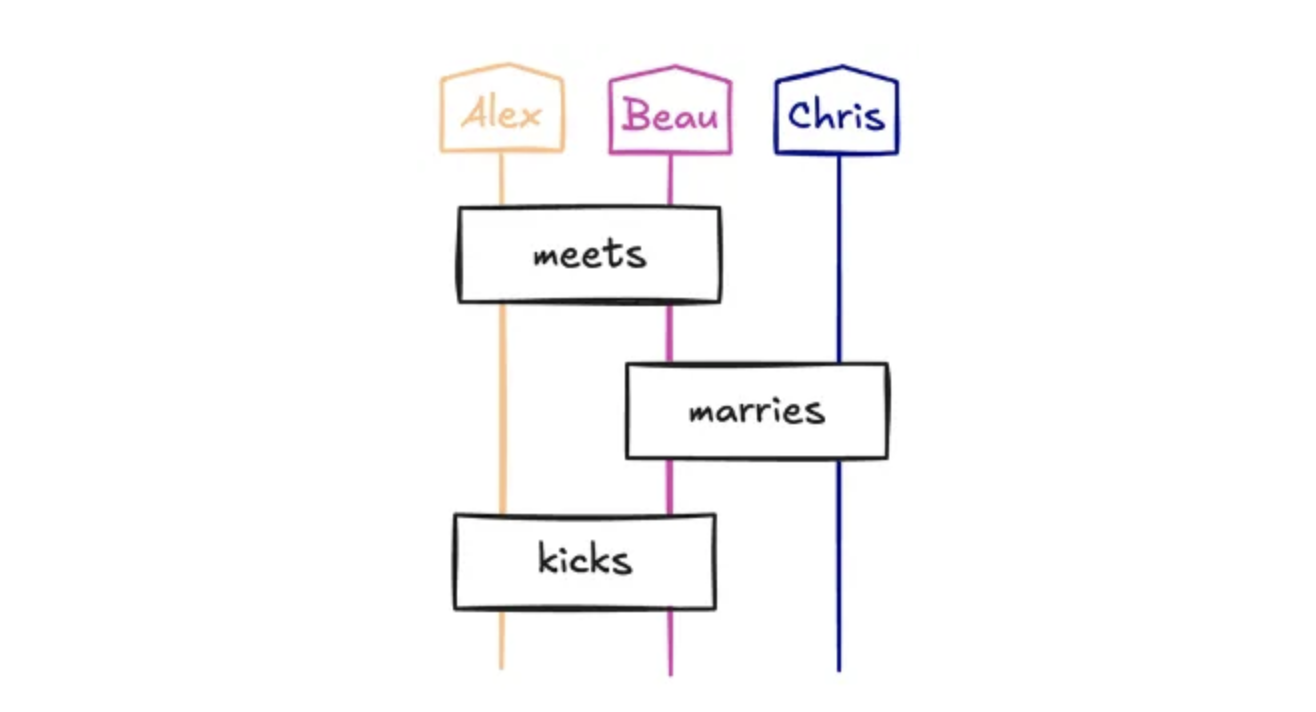

For instance, a simple story like “Alex meets Beau, Beau marries Chris, Alex kicks Beau” is mapped into a network of interactions that quantum computers can process. This structure mirrors the way quantum systems compose mathematically, making them a natural fit for quantum computation.
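To make the idea concrete, here is a minimal Python sketch of how that example story could be held as a text circuit: one wire per character and one gate per verb, attached to the wires of the characters it involves. The names `Gate`, `build_text_circuit`, `layers`, and `wire_history` are hypothetical illustrations, not Quantinuum's software, and the sketch captures only the circuit's shape; in the quantum setting each gate would additionally be implemented as a quantum operation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gate:
    """A verb acting on the wires of the characters it involves (hypothetical type)."""
    verb: str
    wires: tuple[str, ...]


def build_text_circuit(events):
    """Turn (subject, verb, object) triples into a list of wires and an ordered gate list."""
    wires = []
    gates = []
    for subject, verb, obj in events:
        for name in (subject, obj):
            if name not in wires:
                wires.append(name)  # each new character gets its own wire
        gates.append(Gate(verb, (subject, obj)))
    return wires, gates


def layers(gates):
    """Schedule gates into time steps; gates on disjoint wires can share a step."""
    next_free = {}  # earliest time step at which each wire is free again
    schedule = []
    for gate in gates:
        step = max(next_free.get(w, 0) for w in gate.wires)
        if step == len(schedule):
            schedule.append([])
        schedule[step].append(gate)
        for w in gate.wires:
            next_free[w] = step + 1
    return schedule


def wire_history(wires, gates):
    """For each character, the verbs that touched its wire, in story order."""
    return {w: [g.verb for g in gates if w in g.wires] for w in wires}


if __name__ == "__main__":
    story = [("Alex", "meets", "Beau"), ("Beau", "marries", "Chris"), ("Alex", "kicks", "Beau")]
    wires, gates = build_text_circuit(story)
    for t, step in enumerate(layers(gates)):
        print(t, [(g.verb, g.wires) for g in step])
    print(wire_history(wires, gates))
```

The `layers` function is what makes the structure two-dimensional: gates that touch disjoint wires can occupy the same time step, a notion a flat token sequence lacks. In this particular story Beau takes part in every event, so the three gates land in three separate steps.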

Compositional Interpretability

A central idea in Quantinuum’s approach is “compositional interpretability.” This involves breaking down a model into components that are understandable in human terms and showing how these parts combine to form the whole.

“In brief, compositional interpretability boils down to being able to assign a human friendly meaning, such as natural language, to the components of a model, and then being able to understand how they fit together,” the researchers write.
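In standard quantum-circuit notation, the story “Alex meets Beau, Beau marries Chris, Alex kicks Beau” can be written as a product of labeled parts. As a simplified sketch, assume each character is a quantum register (wires $A$, $B$, $C$) and each verb is a unitary acting on the two wires it involves; the encoding in Quantinuum's papers may differ in its details. Every factor carries a human-readable label, and the order of the product records how the parts fit together:

$$
|\psi_{\text{out}}\rangle = \left(U_{\text{kicks}} \otimes I_C\right)\left(I_A \otimes U_{\text{marries}}\right)\left(U_{\text{meets}} \otimes I_C\right)\left(|\psi_A\rangle \otimes |\psi_B\rangle \otimes |\psi_C\rangle\right)
$$

Operators act from right to left, so "meets" is applied first, and each identity $I$ marks a wire that the verb leaves untouched.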

According to Coecke and Khan, this property is critical for creating AI systems that users can trust.

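In the researchers’ view, quantum computers are well suited to providing this interpretability: text circuits compose in the same way quantum processes do, so the relationships between a story’s entities can be encoded directly in quantum states, something classical computers struggle to do efficiently as those relationships grow more complex.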

Training on Classical, Testing on Quantum

One challenge in quantum machine learning is scaling up models for quantum systems, which are fundamentally different from classical ones. Quantinuum addresses this with “compositional generalization”: because the model is built from reusable components, small instances can be trained on classical computers, and the learned components can then be reused to tackle larger, more complex tasks on quantum computers.
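The idea behind compositional generalization can be sketched in a few lines of Python: components (here, one parameter list per verb) are learned on small stories, then reused unchanged to assemble the circuit for a larger story. This is a hypothetical illustration: `train_word_parameters` and `assemble_circuit` are invented names, the "training" is a random stand-in rather than an optimization, and the verbs and parameter counts are assumptions, not details of Quantinuum's experiments.

```python
import math
import random


def train_word_parameters(training_stories, n_params=3, seed=0):
    """Hypothetical stand-in for classical training on small stories.

    Every verb seen in training gets its own parameter list. A real pipeline would
    optimise these values against labelled questions; random values keep the sketch
    self-contained.
    """
    rng = random.Random(seed)
    params = {}
    for story in training_stories:
        for _subject, verb, _obj in story:
            if verb not in params:
                params[verb] = [rng.uniform(0.0, math.tau) for _ in range(n_params)]
    return params


def assemble_circuit(story, params):
    """Build a (possibly much larger) story's circuit from already-trained components.

    Nothing is retrained: each event simply looks up the parameters of its verb.
    """
    missing = sorted({verb for _s, verb, _o in story if verb not in params})
    if missing:
        raise KeyError(f"no trained component for: {missing}")
    return [(verb, (subject, obj), params[verb]) for subject, verb, obj in story]


if __name__ == "__main__":
    small_stories = [[("Alex", "meets", "Beau")], [("Beau", "marries", "Chris")]]
    params = train_word_parameters(small_stories)
    larger_story = [
        ("Alex", "meets", "Beau"),
        ("Beau", "marries", "Chris"),
        ("Chris", "meets", "Dana"),
        ("Dana", "marries", "Alex"),
    ]
    for verb, wires, theta in assemble_circuit(larger_story, params):
        print(verb, wires, [round(t, 3) for t in theta])
```

The larger story involves four characters, yet it needs no new training: its circuit is built entirely from components learned on two-character stories. A verb never seen in training raises an error, which marks the limit of what the learned components can cover.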

Their experiments involved training a model to answer questions about stories represented as text circuits. For example, the model was trained to determine which characters in a story were moving in the same direction. This task, while easy to handle for short stories, becomes exponentially harder for classical computers to simulate as the size of the text increases.
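One way to see why classical computers fall behind as stories grow is to count what a brute-force classical simulation of a quantum model must store: a dense state vector for $n$ qubits holds $2^n$ complex amplitudes. The sketch below assumes, for illustration only, one qubit per character (Quantinuum's encoding may use more) and 16-byte complex128 amplitudes. Specialized techniques such as tensor-network simulation can do better for some circuits, so this shows the brute-force cost rather than a proven lower bound.

```python
def state_vector_amplitudes(num_characters: int, qubits_per_character: int = 1) -> int:
    """Complex amplitudes in a dense state vector covering every character's wire."""
    # n qubits need 2**n amplitudes, so the count is exponential in the number of characters
    return 2 ** (num_characters * qubits_per_character)


def state_vector_bytes(num_characters: int, qubits_per_character: int = 1,
                       bytes_per_amplitude: int = 16) -> int:
    """Memory for that state vector, assuming complex128 storage (16 bytes per amplitude)."""
    return state_vector_amplitudes(num_characters, qubits_per_character) * bytes_per_amplitude


if __name__ == "__main__":
    for n in (5, 10, 20, 30, 40):
        print(f"{n} characters: {state_vector_amplitudes(n):,} amplitudes, "
              f"{state_vector_bytes(n):,} bytes")
```

Under these assumptions, 30 characters already need 16 GiB of memory and 40 characters need 16 TiB, with every additional character doubling the requirement.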

Quantinuum’s quantum computer handled these tasks with high accuracy, even when tested on stories far larger than those used in training. This scalability highlights the potential of quantum systems to tackle problems beyond the practical reach of classical systems.

A Real-World Comparison

The researchers compared their quantum-based approach to classical LLMs like ChatGPT. While ChatGPT is effective at generating human-like responses, it struggles with reasoning tasks involving complex relationships, such as those modeled in Quantinuum’s text circuits.

Their findings suggest that quantum NLP could eventually surpass classical methods in certain domains, particularly those requiring interpretability and scalability.

Implications for AI and Beyond

Quantinuum’s research underscores the potential for quantum computing to reshape AI. By addressing issues of interpretability and energy efficiency, quantum NLP could make AI systems more accessible and sustainable.

These advancements are particularly relevant as quantum computers become more powerful. Quantinuum’s roadmap includes the development of universal, fault-tolerant quantum systems within the decade, which could further accelerate innovation in this space.

The team writes that challenges remain. Classical methods may still outperform quantum systems for specific tasks, and proving the superiority of quantum approaches across the board will take time.

However, Khan and Coecke write that they have some powerful tools to help, and that the Quantinuum roadmap is leading to quantum supercomputers: hybrid systems that integrate quantum computers with AI and high-performance computing. By combining the strengths of classical and quantum systems, the researchers hope to unlock new capabilities in AI and enable applications that are out of reach today. As the field evolves, quantum computing is likely to play a growing role in addressing some of the most pressing challenges in AI.

The team writes: “We foresee a period of rapid innovation as powerful quantum computers that cannot be classically simulated become more readily available. This will likely be disruptive, as more and more use cases, including ones that we might not be currently thinking about, come into play.” They add: “Interestingly and intriguingly, we are also pioneering the use of powerful quantum computers in a hybrid system that has been described as a ‘quantum supercomputer’ where quantum computers, HPC and AI work together in an integrated fashion and look forward to using these systems to advance our work in language processing that can help solve the problem with LLM’s that we highlighted at the start of this article.”

For a deeper, more technical dive, along with links to the scientific papers that back this concept up, read the post on the company’s Medium blog.