CERN Interviews John Preskill on The Past, Present And Future of Quantum Science

Insider Brief

- Quantum pioneer John Preskill gave an in-depth interview with CERN, covering everything from his introduction to quantum science to the future of the field and industry.

- Preskill also offered a look at some of the challenges and opportunities of quantum computing.

- He also sees the exploration of quantum computing as a scientific endeavor that gives us a better understanding of our world, as well as new technologies.

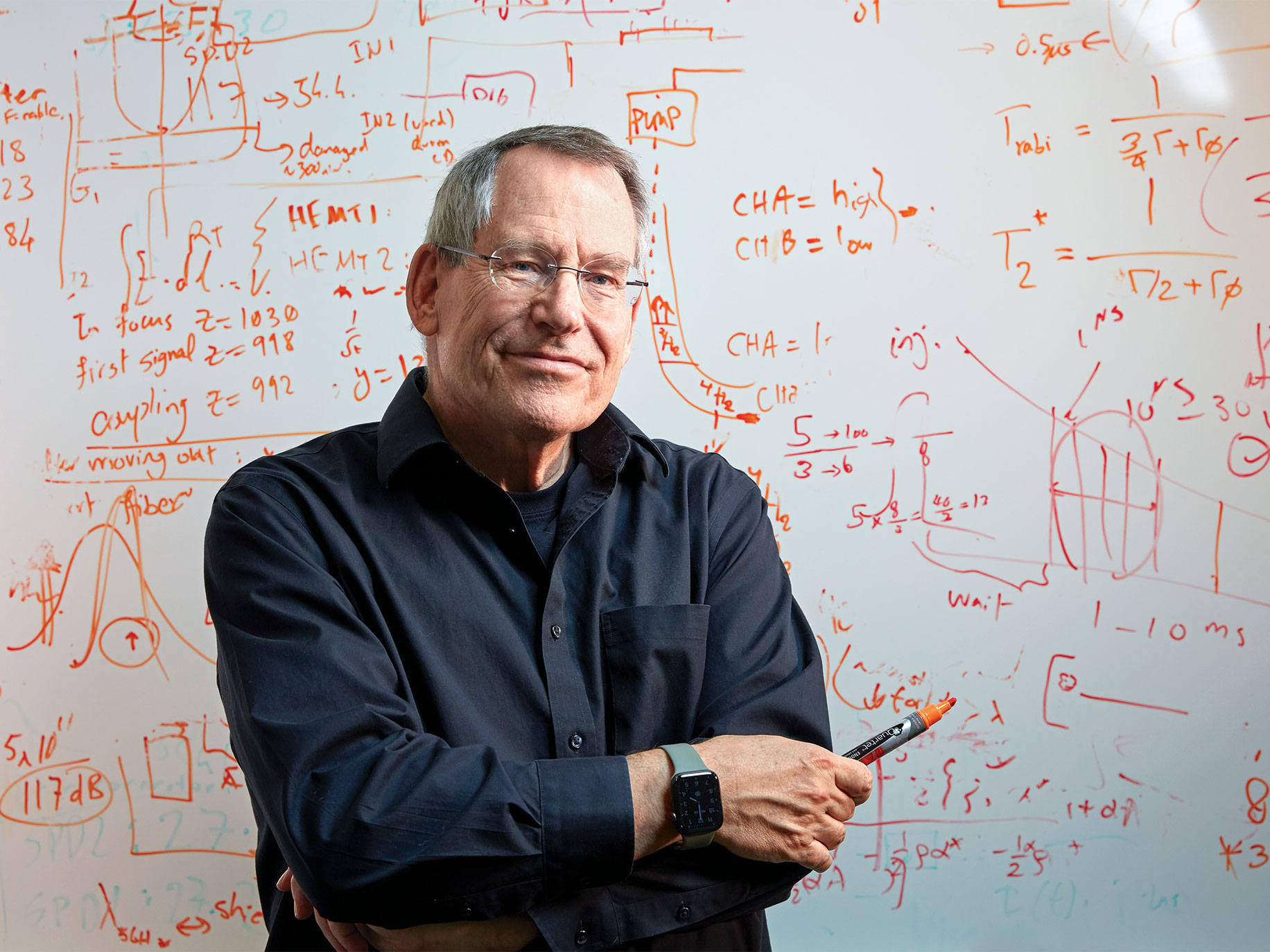

- Image: IQIM Caltech/Gregg Segal

As a professor at Caltech and the director of the Institute for Quantum Information and Matter, John Preskill was a pioneer in research that provided the foundations of today’s quantum industry. With a rich background in particle physics and fundamental physics, he brings a unique perspective to a conversation about quantum technology’s past, present and, especially, future.

In an exclusive interview with CERN, Preskill covered the current state of quantum computing, its potential applications, and what the future holds for this rapidly evolving field.

We’ve picked out some highlights, but reading the complete interview is recommended.

The Journey to Quantum Physics

Reflecting on his journey, Preskill said he may have been a little late for investigation into the Standard Model, but he and his colleagues were determined to make an impact.

He told CERN: “You could call it a Eureka moment. My generation of particle theorists came along a bit late to contribute to the formulation of the Standard Model. Our aim was to understand physics beyond the Standard Model. But the cancellation of the Superconducting Super Collider (SSC) in 1993 was a significant setback, delaying opportunities to explore physics at the electroweak scale and beyond. This prompted me to seek other areas of interest.”

He continues, “At the same time, I became intrigued by quantum information while contemplating black holes and the fate of information within them, especially when they evaporate due to Hawking radiation. In 1994, Peter Shor’s algorithm for factoring was discovered, and I learned about it that spring. The idea that quantum physics could solve problems unattainable by classical means was remarkably compelling.”

“I got quite excited right away because the idea that we can solve problems because of quantum physics that we wouldn’t otherwise be able to solve, I thought, was a very remarkable idea. Thus, I delved into quantum information without initially intending it to be a long-term shift, but the field proved rich with fascinating questions. Nearly 30 years later, quantum information remains my central focus.”

Challenging Conventional Computation

Quantum information science and quantum computing challenge conventional understandings of computation, according to Preskill.

“Fundamentally, computer science is about what computations we can perform in the physical universe. The Turing machine model, developed in the 1930s, captures what it means to do computation in a minimal sense. The extended Church-Turing thesis posits that anything efficiently computable in the physical world can be efficiently computed by a Turing machine. However, quantum computing suggests a need to revise this thesis because Turing machines can’t efficiently model the evolution of complex, highly entangled quantum systems. We now hypothesize that the quantum computing model better captures efficient computation in the universe. This represents a revolutionary shift in our understanding of computation, emphasizing that truly understanding computation involves exploring quantum physics.”

Impacting Other Scientific Fields

Preskill sees quantum information science profoundly impacting other scientific fields in the coming decades. “From the beginning, what fascinated me about quantum information wasn’t just the technology, though that’s certainly important and we’re developing and using these technologies. More fundamentally, it offers a powerful new way of thinking about nature. Quantum information provides us with perspectives and tools for understanding highly entangled systems, which are challenging to simulate with conventional computers.”

He adds, “The most significant conceptual impacts have been in the study of quantum matter and quantum gravity. In condensed matter physics, we now classify quantum phases of matter using concepts like quantum complexity and quantum error correction. Quantum complexity considers how difficult it is to create a many-particle or many-qubit state using a quantum computer. Some quantum states require a number of computation steps that grow with system size, while others can be created in a fixed number of steps, regardless of system size. This distinction is fundamental for differentiating phases of matter.”

Bridging Theory and Experiment

Addressing the relationship between theoretical advancements in quantum algorithms and their practical implementation, Preskill said: “The interaction between theory and experiment is vital in all fields of physics. Since the mid-1990s, there’s been a close relationship between theory and experiment in quantum information. Initially, the gap between theoretical algorithms and hardware was enormous. Yet, from the moment Shor’s algorithm was discovered, experimentalists began building hardware, albeit at first on a tiny scale. After nearly 30 years, we’ve reached a point where hardware can perform scientifically interesting tasks.”

He added: “For significant practical impact, we need quantum error correction due to noisy hardware. This involves a large overhead in physical qubits, requiring more efficient error correction techniques and hardware approaches. We’re in an era of co-design, where theory and experiment guide each other. Theoretical advancements inform experimental designs, while practical implementations inspire new theoretical developments.”

The State of Qubits Today

Discussing the current state of qubits in today’s quantum computers, Preskill commented, “Today’s quantum computers based on superconducting electrical circuits have up to a few hundred qubits. However, noise remains a significant issue, with error rates only slightly better than 1% per two-qubit gate, making it challenging to utilize all these qubits effectively.”

“Additionally, neutral atom systems held in optical tweezers are advancing rapidly. At Caltech, a group recently built a system with over 6,000 qubits, although it’s not yet capable of computation. These platforms weren’t considered competitive five to ten years ago but have advanced swiftly due to theoretical and technological innovations.”
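To see why an error rate near 1% per two-qubit gate makes it hard to use every qubit, a rough back-of-the-envelope sketch helps. It assumes each gate fails independently with the same probability, which is a simplification and not a claim from the interview; the function name and the gate counts are illustrative only.

```python
def success_probability(gate_error: float, num_gates: int) -> float:
    """Chance that every gate in a circuit succeeds.

    Assumes independent, identical gate errors (a simplifying assumption).
    """
    return (1.0 - gate_error) ** num_gates


if __name__ == "__main__":
    # Hypothetical circuit sizes, using the roughly 1% error rate quoted above.
    for num_gates in (10, 100, 1000):
        p = success_probability(0.01, num_gates)
        print(f"{num_gates:>5} two-qubit gates -> error-free with probability {p:.5f}")
```

Under these assumptions, the chance of an error-free run falls off exponentially with the number of gates, which is one way to read the motivation for error correction discussed later in the interview.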

Advancing Quantum Computing

Preskill offered an overview of neutral atom and superconducting systems in the interview.

“In neutral atom systems, the qubits are atoms, with quantum information encoded in either their ground state or a highly excited state, creating an effective two-level system. These atoms are held in place by optical tweezers, which are finely focused laser beams. By rapidly reconfiguring these tweezers, we can make different atoms interact with each other. When atoms are in their highly excited states, they have large dipole moments, allowing us to perform two-qubit gates. By changing the positions of the qubits, we can facilitate interactions between different pairs.”

“In superconducting circuits, qubits are fabricated on a chip. These systems use Josephson junctions, where Cooper pairs tunnel across the junction, introducing nonlinearity into the circuit. This nonlinearity allows us to encode quantum information in either the lowest energy state or the first excited state of the circuit. The energy splitting of the second excited state is different from the first, enabling precise manipulation of just those two levels without inadvertently exciting higher levels. This behavior makes them function effectively as qubits, as two-level quantum systems.”
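The unequal level spacing Preskill describes can be illustrated numerically. The sketch below assumes a transmon-style circuit in the regime where the Josephson energy is much larger than the charging energy, and uses the standard approximate level formula for that regime. The interview does not name a specific circuit design, and the energy values here are hypothetical.

```python
import math


def transmon_level(n: int, ej: float, ec: float) -> float:
    """Approximate energy of level n, in the same units as ej and ec.

    Uses the large ej/ec approximation; not valid outside that regime.
    """
    return -ej + math.sqrt(8 * ej * ec) * (n + 0.5) - (ec / 12) * (6 * n * n + 6 * n + 3)


def transition(n: int, ej: float, ec: float) -> float:
    """Energy needed to go from level n to level n + 1."""
    return transmon_level(n + 1, ej, ec) - transmon_level(n, ej, ec)


if __name__ == "__main__":
    ej, ec = 20.0, 0.25  # hypothetical values, in GHz
    f01 = transition(0, ej, ec)  # qubit transition: ground -> first excited
    f12 = transition(1, ej, ec)  # unwanted transition: first -> second excited
    print(f"0->1: {f01:.4f} GHz, 1->2: {f12:.4f} GHz, difference: {f12 - f01:.4f} GHz")
```

In this approximation the two transitions differ by the charging energy, so a drive tuned to the 0->1 transition is off resonance for 1->2. That gap is what lets the lowest two levels be addressed as a qubit.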

Scaling Up Quantum Systems

As research teams scale up from a few hundred to a thousand qubits, Preskill said there will be challenges and a need for constant innovation.

He said: “A similar architecture might work for a thousand qubits. But as the number of qubits continues to increase, we’ll eventually need a modular design. There’s a limit to how many qubits fit on a single chip or in a trap. Future architectures will require modules with interconnectivity, whether chip-to-chip or optical interconnects between atomic traps.”

Error Correction Algorithms

Unlike classical computing, which has relatively little need for error correction, quantum computing relies on sensitive and intricate quantum states, which makes error correction a formidable hurdle. Preskill should know a thing or two about the subject: he’s credited with naming the present era of quantum computing Noisy Intermediate-Scale Quantum, or NISQ.

Preskill offers a distinctive way of describing that noise and the mechanics behind error-correction algorithms in quantum computers: “Think of it as software. Error correction in quantum computing is essentially a procedure akin to cooling. The goal is to remove entropy introduced by noise. This is achieved by processing and measuring the qubits, then resetting the qubits after they are measured. The process of measuring and resetting reduces disorder caused by noise.”

“The process is implemented through a circuit. A quantum computer can perform operations on pairs of qubits, creating entanglement. In principle, any computation can be built up using two-qubit gates. However, the system must also be capable of measuring qubits during the computation. There will be many rounds of error correction, each involving qubit measurements. These measurements identify errors without interfering with the computation, allowing the process to continue.”
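The measure-and-correct cycle described above can be sketched with a classical toy model: a three-bit repetition code that guards against bit flips. This is only an analogy under stated assumptions. Real quantum codes must also handle phase errors and extract the checks through extra qubits, and none of the names or parameters below come from the interview.

```python
import random

# Maps each pair of parity-check results to the bit that should be flipped back.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def encode(bit):
    """Store one logical bit redundantly in three physical bits."""
    return [bit, bit, bit]


def apply_noise(block, p, rng):
    """Flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in block]


def measure_syndrome(block):
    """Compare neighboring bits; the result locates an error
    without revealing the encoded value."""
    return (block[0] ^ block[1], block[1] ^ block[2])


def correct(block):
    """Undo the single bit flip indicated by the syndrome, if any."""
    flip = CORRECTION[measure_syndrome(block)]
    fixed = list(block)
    if flip is not None:
        fixed[flip] ^= 1
    return fixed


def logical_error_rate(p, rounds, trials, seed=0):
    """Fraction of trials in which an encoded 0 ends up decoded as 1."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        block = encode(0)
        for _ in range(rounds):
            block = correct(apply_noise(block, p, rng))
        if sum(block) >= 2:  # majority vote decodes to 1
            failures += 1
    return failures / trials


if __name__ == "__main__":
    print(logical_error_rate(p=0.01, rounds=10, trials=100_000))
```

Each round, the parity checks play the role of the measurements Preskill mentions: they pick up the disorder that noise introduced, and the correction step removes it. In this toy model the encoded bit fails only when two or more bits flip within the same round.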

Lessons from Quantum Computing

As scientists learn about quantum computing, those lessons reverberate across not just quantum science, but other fields of physics, according to Preskill.

When asked if progress in quantum computing teaches us anything new about quantum physics at the fundamental level, Preskill said, “This question is close to my heart because I started out in high-energy physics, drawn by its potential to answer the most fundamental questions about nature. However, what we’ve learned from quantum computing aligns more with the challenges in condensed matter physics. As Phil Anderson famously said, ‘more is different.’ When you have many particles interacting strongly quantum mechanically, they become highly entangled and exhibit surprising behaviors.”

“Studying these quantum devices has significantly advanced our understanding of entanglement. We’ve discovered that quantum systems can be extremely complex, difficult to simulate, and yet robust in certain ways. For instance, we’ve learned about quantum error correction, which protects quantum information from errors.”

While quantum computing advancements provide new insights into quantum mechanics, Preskill emphasizes that these insights pertain more to how quantum mechanics operates in complex systems than to the foundational aspects of quantum mechanics itself.

This understanding is crucial because it could lead to new technologies and innovative ways of comprehending the world around us.