Breaking The Surface: Google Demonstrates Error Correction Below Surface Code Threshold

Insider Brief

- Google Quantum AI demonstrated a quantum memory system that significantly reduced error rates, operating below the critical threshold for effective quantum error correction.

- The system’s logical qubit outlasted its best physical qubit by a factor of more than two, showing enhanced stability and reliability through error correction.

- The study notes that reaching much lower error rates would require a substantial increase in qubit count, highlighting both the challenges and the potential of scaling up quantum computing systems.

A Google Quantum AI-led team of researchers reports a significant advance in quantum error correction (QEC), a vital technology for the development of practical quantum computing. In a paper posted on the preprint server arXiv, the researchers describe how they achieved error rates below the critical threshold necessary for effective quantum error correction.

This achievement marks a critical step toward scalable, fault-tolerant quantum computing, according to the paper.

Quantum error correction is essential for quantum computing because quantum information is highly susceptible to errors due to environmental noise and imperfect quantum operations. The goal of QEC is to protect quantum information by encoding it across multiple physical qubits to form a more stable logical qubit. However, QEC only becomes effective when the error rate of physical qubits is below a specific threshold. Until now, achieving this level of performance in a working quantum system has remained elusive.

In their latest work, Google’s team, led by Julian Kelly, Director of Quantum Hardware at Google Quantum AI, demonstrated a quantum memory that operates below this threshold on a superconducting quantum computer, clearing what many in the field had considered a significant hurdle to using quantum computers for calculations that can best today’s classical computers.

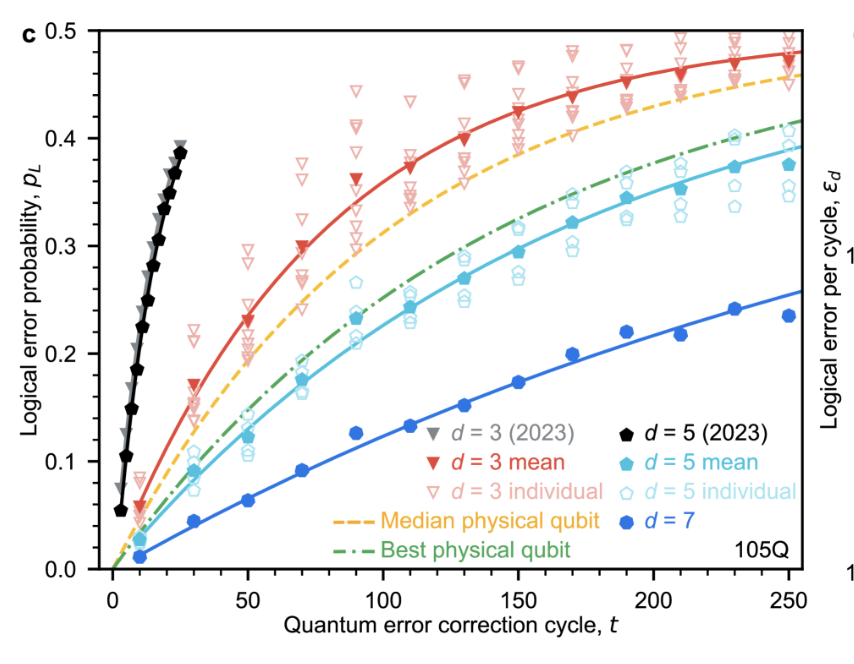

“We show our systems operating below the threshold by measuring that the error rate of a logical qubit more than halves between a code distance of 3 to 5, and again from 5 to 7, to an error-per-cycle of 0.143% with 101 physical qubits,” Kelly wrote in a LinkedIn post announcing the paper.

The study introduces two surface code memories: a distance-7 code and a distance-5 code, the latter integrated with a real-time decoder. A code's distance is the smallest number of physical-qubit errors that can corrupt the encoded information without being detected, so a distance-7 code offers stronger protection against errors than a distance-5 code.
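A general result from coding theory, not something specific to Google's experiment, makes the link between distance and protection concrete: a code of distance $d$ can correct any combination of up to $t$ physical-qubit errors, where

```latex
t = \left\lfloor \frac{d - 1}{2} \right\rfloor
\qquad\Rightarrow\qquad
d = 5:\ t = 2, \qquad d = 7:\ t = 3
```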

The distance-7 code, which uses 101 physical qubits, achieved an error rate of 0.143% per cycle of error correction, marking the first time a quantum processor has definitively shown below-threshold performance. Below threshold, the logical error rate falls exponentially as the code distance grows, a crucial feature for future quantum computing systems.

The team writes in the paper: “The logical error rate of our larger quantum memory is suppressed by a factor of Λ = 2.14 ± 0.02 when increasing the code distance by two, culminating in a 101-qubit distance-7 code with 0.143% ± 0.003% error per cycle of error correction”.

In other words, each time the researchers increased the code distance by two, the logical error rate fell by more than half (a factor of Λ ≈ 2.14), a crucial step toward making quantum computers practical and effective.
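The suppression factor can be written as a simple scaling rule for odd distances $d$. Applying it beyond distance 7 is an extrapolation, not a measurement: it assumes $\Lambda$ stays at its measured value as the code grows.

```latex
\Lambda = \frac{\varepsilon_d}{\varepsilon_{d+2}} \approx 2.14,
\qquad
\varepsilon_d \approx \frac{\varepsilon_7}{\Lambda^{(d-7)/2}},
\qquad
\varepsilon_9 \approx \frac{0.143\%}{2.14} \approx 0.067\%
```

Under that assumption, a hypothetical distance-9 code would reach roughly 0.067% error per cycle.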

Google’s experiment also demonstrated real-time decoding of quantum errors, which is vital for running complex quantum algorithms. At a code distance of 5, the decoder kept pace with the system’s 1.1-microsecond error-correction cycle, processing error data as fast as the processor produced it, with an average latency of 63 microseconds. This performance was sustained for up to a million cycles, showing the stability needed for long-duration quantum computations.
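Keeping pace (throughput) and latency are different things, and a toy model shows why the distinction matters. The sketch below assumes a single decoder that handles one round of error data at a time, in arrival order. The function name `final_lag_us` and the per-round decode times are hypothetical illustrations; this is not how Google's decoder is built.

```python
def final_lag_us(n_rounds: int, cycle_us: float, decode_us_per_round: float) -> float:
    """Toy first-in, first-out decoder model (hypothetical, for illustration).

    One round of error data arrives every `cycle_us` microseconds and the
    decoder spends `decode_us_per_round` microseconds on each round, in order.
    Returns how long after the last round arrives the decoder finishes it.
    """
    decoder_free_at = 0.0  # time at which the decoder finishes its current round
    for k in range(n_rounds):
        arrival = k * cycle_us                  # round k arrives
        start = max(arrival, decoder_free_at)   # wait if the decoder is still busy
        decoder_free_at = start + decode_us_per_round
    last_arrival = (n_rounds - 1) * cycle_us
    return decoder_free_at - last_arrival


for decode_us in (1.0, 1.2):  # hypothetical per-round decode times
    lag = final_lag_us(100_000, cycle_us=1.1, decode_us_per_round=decode_us)
    print(f"decode {decode_us} us/round -> final lag {lag:.1f} us")
```

With a 1.1-microsecond cycle, a decoder needing 1.0 microseconds per round never falls behind, so its lag stays near 1 microsecond however long the run. At 1.2 microseconds per round the backlog grows by 0.1 microseconds every cycle, reaching about 10 milliseconds after 100,000 cycles. A decoder can therefore have a fixed, nonzero latency and still keep pace, as long as its throughput matches the cycle rate.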

One of the more important aspects of the research was the longevity of the logical qubit compared to its constituent physical qubits. The logical qubit’s lifetime exceeded that of the best physical qubit by a factor of 2.4. Outliving the best physical qubit in this way is known as going beyond break-even, and it is further evidence that the error correction protocols were effective.

The team writes: “This logical memory is also beyond break-even, exceeding its best physical qubit’s lifetime by a factor of 2.4 ± 0.3.”

Challenges And Future Directions

This progress did not come without challenges. The study found that logical performance is limited by rare correlated error events, which occurred roughly once an hour, or once every three billion cycles. These errors, though infrequent, are a hurdle to achieving the ultra-low error rates required for practical quantum computing.
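The two rates are consistent with each other if one assumes that the 1.1-microsecond cycle time reported for the real-time decoding experiment also applies to these runs (an assumption; the article does not state the cycle time used here):

```latex
3 \times 10^{9}\ \text{cycles} \times 1.1\ \mu\text{s/cycle}
  = 3.3 \times 10^{3}\ \text{s} \approx 55\ \text{min}
```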

The researchers implemented high-distance repetition codes up to distance-29 to investigate these errors, marking an important step in understanding and mitigating such issues.
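To see why rare correlated events matter, consider a textbook toy model of a repetition code, which stores one bit by copying it across d qubits and recovers it by majority vote. Under independent errors the failure probability shrinks rapidly with distance, but an event that flips every qubit at once sets a floor that extra distance cannot remove. The physical error rate p = 0.01, the burst probability q = 10⁻⁹, and both function names are hypothetical; this is not the analysis used in the paper.

```python
from math import comb


def repetition_logical_error(d: int, p: float) -> float:
    """Failure probability of majority-vote decoding for a distance-d
    bit-flip repetition code (d odd), assuming each qubit flips
    independently with probability p."""
    # Decoding fails when more than half of the d qubits have flipped.
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range((d + 1) // 2, d + 1))


def with_burst(d: int, p: float, q: float) -> float:
    """Same code, plus a hypothetical correlated burst that, with
    probability q, flips every qubit at once and always defeats
    majority voting."""
    return q + (1 - q) * repetition_logical_error(d, p)


for d in (5, 11, 17, 23, 29):
    print(d, repetition_logical_error(d, 0.01), with_burst(d, 0.01, 1e-9))
```

With these numbers the independent-error term already falls below 10⁻⁹ by distance 11, so from there on the burst term dominates and the total stops improving with distance.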

“Our results present device performance that, if scaled, could realize the operational requirements of large-scale fault-tolerant quantum algorithms,” the paper concludes.

Google’s advance, then, isn’t just a technical milestone but could also serve as a critical proof point in the broader quest to build practical quantum computers capable of solving problems that are intractable for classical systems. Achieving below-threshold error rates paves the way for more complex and longer quantum computations, moving the field closer to realizing the full potential of quantum technology.

While the results are promising, significant work remains. The researchers acknowledge that scaling up the current system to more qubits and lower error rates will be resource-intensive and bring additional challenges. For example, achieving a logical qubit with an error rate of 10⁻⁶ (one in a million) would require a distance-27 code using 1,457 physical qubits, a considerable increase from the current 101. Additionally, as the system scales, the classical coprocessors responsible for decoding quantum errors in real time will face increased demands, further complicating the challenge.
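The distance-27 and 1,457-qubit figures can be reproduced with a short back-of-the-envelope calculation. This sketch assumes the measured suppression factor Λ = 2.14 holds unchanged at larger distances and uses the standard rotated surface code layout of d² data qubits plus d² − 1 measurement qubits; the function names are hypothetical.

```python
def projected_logical_error(d: int, eps7: float = 1.43e-3, lam: float = 2.14) -> float:
    """Extrapolated logical error per cycle at odd distance d >= 7, assuming
    the measured suppression factor (Lambda) holds at larger distances."""
    return eps7 / lam ** ((d - 7) / 2)


def min_distance(target: float, eps7: float = 1.43e-3, lam: float = 2.14) -> int:
    """Smallest odd distance whose projected logical error meets the target."""
    if target <= 0 or lam <= 1:
        raise ValueError("need target > 0 and lam > 1 (below threshold)")
    d = 7
    while projected_logical_error(d, eps7, lam) > target:
        d += 2  # surface code distances step by two
    return d


def surface_code_qubits(d: int) -> int:
    """Qubits in a standard rotated surface code: d*d data + (d*d - 1) measure."""
    return 2 * d * d - 1


d = min_distance(1e-6)
print(d, surface_code_qubits(d), projected_logical_error(d))
```

This gives distance 27 and 1,457 qubits, matching the figures above (distance 25 would fall just short, at about 1.5 × 10⁻⁶). The same formula gives 97 qubits at distance 7, so the 101 qubits used in the experiment presumably include a few qubits beyond this basic layout.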

Despite these challenges, the exponential improvement in logical error rates as the code distance increases offers a path forward. Reducing physical error rates by half could lead to a four-order-of-magnitude improvement in logical performance, potentially bringing quantum computers closer to the error rates needed for real-world applications, according to the researchers.
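The four-orders-of-magnitude figure is consistent with a standard heuristic for below-threshold codes, in which the logical error rate scales with the ratio of the physical error rate $p$ to the threshold $p_{\text{thr}}$ (so that $\Lambda \approx p_{\text{thr}}/p$). Evaluating it at distance 27, taken from the example above, is an assumption, since the article does not say which distance the estimate refers to:

```latex
\varepsilon_d \propto \left(\frac{p}{p_{\text{thr}}}\right)^{(d+1)/2}
\quad\Rightarrow\quad
\frac{\varepsilon_d(p/2)}{\varepsilon_d(p)} = 2^{-(d+1)/2},
\qquad
d = 27:\ 2^{-14} = \frac{1}{16\,384} \approx 6.1 \times 10^{-5}
```

Halving $p$ would then cut the distance-27 logical error rate by a factor of about 16,000, roughly four orders of magnitude.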

Future advances in error correction protocols and decoding algorithms are expected to reduce the overheads associated with these systems, making them more practical for large-scale use.

The research team also included members from Google Research, the University of Massachusetts Amherst, Google DeepMind, the University of California, Santa Barbara, the University of Connecticut, Auburn University, ETH Zurich, the Massachusetts Institute of Technology, the University of California, Riverside, and Yale University.

For a deeper dive into the advance and a more technical explanation, please review the paper on arXiv.